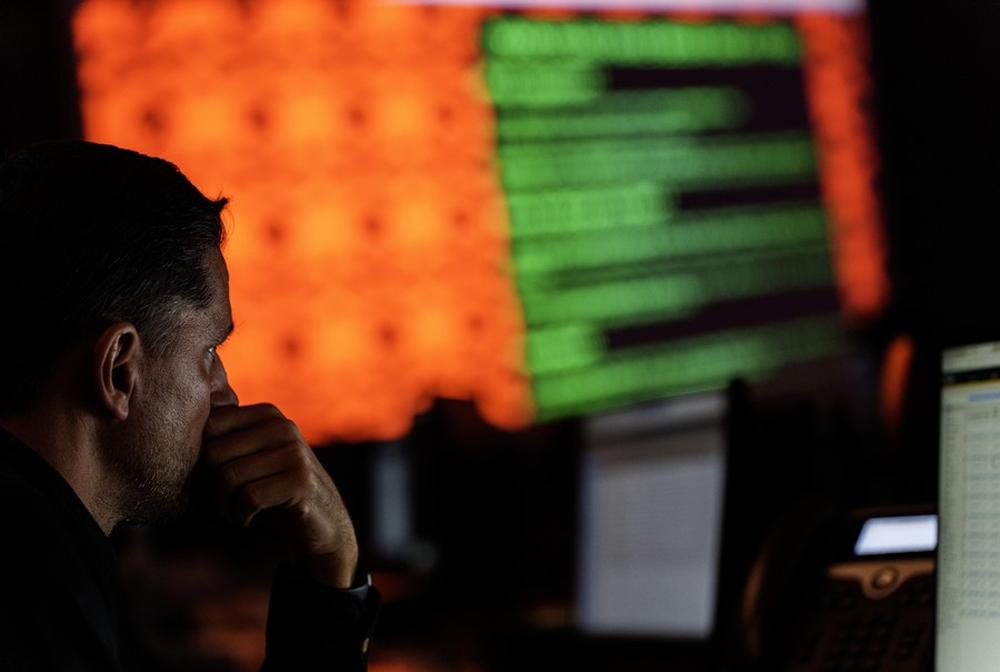

▶ AI in Warfare: The Habsora AI system highlights the growing integration of artificial intelligence into military operations, serving as a decision-support tool to enhance targeting efficiency while raising concerns about potential algorithmic errors and ethical implications in wartime decision-making.

▶ Strategic Transformations: AI is driving a shift from network-centric to decision-centric warfare, emphasizing rapid information analysis and adaptive strategies, exemplified by initiatives like the U.S. Replicator Initiative, which promotes smaller, autonomous systems over large platforms.

▶ Challenges of Trust and Autonomy: The military application of AI introduces trust issues regarding algorithmic biases, error risks, and the extent of human oversight, necessitating a balance between leveraging AI's capabilities and managing its uncertainties amidst great power competition.

The deployment of the Habsora AI system amid the ongoing Israel-Hamas conflict signals a pivotal moment in the evolution of warfare: artificial intelligence has now moved from a novel concept to a practical tool in military operations. According to the Israel Defense Forces (IDF), Habsora AI carried out targeting operations against Hamas militants, allegedly contributing to over 12,000 strikes against identified targets in approximately 27 days. The Habsora AI system functions as a decision-support tool, analyzing comprehensive data accumulated over years by Israel, including residential information on 30,000-40,000 suspected Hamas militants, drone footage, satellite surveillance data, and intercepted communications to predict their locations. While Israel claims the Habsora AI system can reduce civilian casualties during war, the system's reliance on algorithms implies there is potential for errors at various stages of the algorithm’s process. Ultimately, whether we can trust the autonomous operation of algorithms without human intervention in wartime decision-making requires further discussion and research.

The most extreme scenario related to the military use of AI is its application in nuclear weapons systems. In 2022, U.S. President Biden declared that decisions regarding the launch of nuclear weapons would remain under human control, but China and Russia have made no such pledge. International agreements on the military application of AI are still in the discussion phase among major powers.

Given that the current great power competition involves nuclear-armed states, the rise of AI as a factor in decision making raises particular concern. Since today’s AI mostly enhances the capabilities of automated systems, it does not yet pose a direct threat or exacerbate the security dilemma between nations. Instead, its role can be understood better as a multiplier or enabler for existing automated systems.

However, the implications of AI implemented in a military context have been widely discussed, particularly with respect to the reduction in decision-making time that AI enhancement can offer, which may incentivize and enable preemptive strikes. This, in turn, could make crisis escalation control more challenging. On the other hand, other uses of AI could actually have a deterring effect. Even if AI speeds up decision-making through rapid data processing on the preemptive strike side of the equation, “deterrence by deception” may still be an effective roadblock to countries considering a first strike. For example, generative AI can spread disinformation, influencing the adversary’s decision-making process and potentially delaying preemptive strike decisions.

Nevertheless, the military application of AI has the potential to significantly transform the conduct and nature of warfare. A prominent area of discussion is the shift towards decision-centric warfare, driven by AI-based command, control, communications, computers, intelligence, surveillance, and reconnaissance (C4ISR) systems. Current network-centric warfare seeks to achieve information superiority through the networking of geographically dispersed weapons systems and units. While technological improvements in weaponry and platforms are important, network-centric warfare emphasizes creating a network structure for better decision-making. In contrast, decision-centric warfare focuses on quickly analyzing vast amounts of information to make decisions, based on the premise that a quantitative advantage in information does not necessarily lead to qualitatively superior decisions. AI helps analyze information related to cognitive domains and aids decision-making while disrupting or undermining the adversary’s own decision-making to achieve victory in conflict. Mosaic Warfare is a representative example of decision-centric warfare, characterized by rapid decision-making, flexible reorganization of dispersed forces through AI, and increased uncertainty for the adversary. To achieve this, the U.S. is shifting from large, expensive war platforms to smaller, autonomous weapons systems, exemplified by the Replicator Initiative recently unveiled by the U.S. Department of Defense. This strategy has been proposed as part of the "Hellscape Strategy" tailored to the strategic environment of the Taiwan Strait.

The complicating factor is that China and Russia are also envisioning their own strategies, such as “System Destruction Warfare” and “New Generation Warfare,” respectively. In turn, the dynamics and fundamentals of warfighting are expected to evolve rapidly in the near future.

Amidst these shifts and the increasing integration of AI into conflict systems and decision making, the critical issue is whether trust can be established between humans and AI. These developments raise the question of whether we can tolerate the uncertainty of how AI will operate in specific situations. The algorithms that power AI are not flawless; they can carry biases that influence data analysis and may lead to errors. Ultimately, this challenge of trust ties into the question of how much autonomy humans are willing to give AI.

In summary, AI is more likely to serve as a force multiplier, amplifying the capabilities and impact of new technologies, rather than being inherently destructive on its own. The intentions of the actors utilizing AI are also crucial. The current great power competition, particularly with revisionist states, raises concerns, and AI could become a major area of competition, potentially intensifying existing rivalries. Granting AI unchecked autonomy in the context of great power competition could pose risks to all of humanity, making it essential to prioritize trust-building in the military application of AI.

Dr. Kuyoun CHUNG is an Associate Professor of Political Science at Kangwon National University. Her research focuses on US foreign policy, the Korean Peninsula, and security issues in the Indo-Pacific, including alliance politics, regional security architecture, nuclear politics, maritime security, and emerging technologies. She received her B.A. and M.A. from Korea University, and her Ph.D. in Political Science from the University of California, Los Angeles. Previously, she was a lecturer in the Department of Political Science at UCLA, a visiting professor at the Korea National Diplomatic Academy, and a research fellow at the Korea Institute for National Unification. She currently serves as a member of the policy advisory committees of the Ministry of Defense, the Republic of Korea Navy, and the National Security Council under the Office of the President.